NVIDIA CONVERGED ACCELERATORS

Where powerful performance, enhanced networking, and robust security come together—in one package.

Faster, More Secure AI Systems

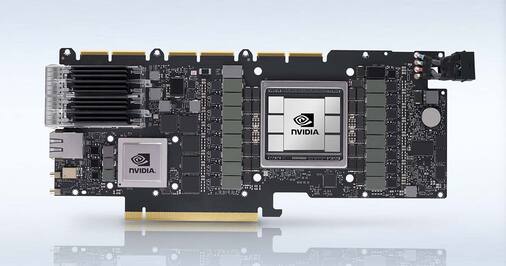

In one unique, efficient architecture, NVIDIA converged accelerators combine the powerful performance of NVIDIA GPUs with the enhanced networking and security of NVIDIA smart network interface cards (SmartNICs) and data processing units (DPUs). They deliver maximum performance and enhanced security for I/O-intensive, GPU-accelerated workloads, from the data center to the edge.

Unprecedented GPU Performance

NVIDIA Tensor Core GPUs deliver unprecedented performance and scalability for AI, high-performance computing (HPC), data analytics, and other compute-intensive workloads. With Multi-Instance GPU (MIG), each GPU can be partitioned into multiple GPU instances, each fully isolated and secured at the hardware level. Systems can be configured to offer right-sized GPU acceleration for optimal utilization and sharing across applications big and small, in both bare-metal and virtualized environments.


Enhanced Networking and Security

The NVIDIA® ConnectX® family of smart network interface cards (SmartNICs) offers best-in-class network performance, advanced hardware offloads, and accelerations. NVIDIA BlueField® DPUs combine the performance of ConnectX with full infrastructure-on-chip programmability. By offloading, accelerating, and isolating networking, storage, and security services, BlueField DPUs provide a secure, accelerated infrastructure for any workload in any environment.


A New Level of Data Efficiency

NVIDIA converged accelerators include an integrated PCIe switch, allowing data to travel between the GPU and the network without flowing across the server's PCIe system. This enables a new level of data center performance, efficiency, and security for I/O-intensive, GPU-accelerated workloads.


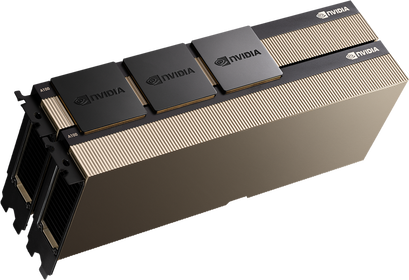

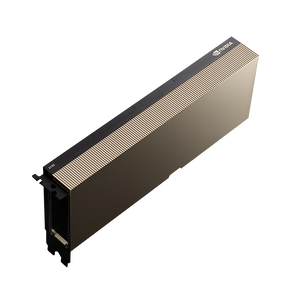

NVIDIA A30X and A100X

These converged accelerators enable data-intensive edge and data center workloads to run with maximum security and performance.

The NVIDIA A30X combines the NVIDIA A30 Tensor Core GPU with the BlueField-2 DPU. With MIG, the GPU can be partitioned into as many as four GPU instances, each running a separate service.

This card’s design provides a good balance of compute and I/O performance for use cases such as 5G vRAN and AI-based cybersecurity.

The NVIDIA A100X brings together the power of the NVIDIA A100 Tensor Core GPU with the BlueField-2 DPU. With MIG, each A100 can be partitioned into as many as seven GPU instances, allowing even more services to run simultaneously.

The A100X is ideal for use cases where compute demands are more intensive. Examples include 5G with massive multiple-input and multiple-output (MIMO) capabilities, AI-on-5G deployments, and specialized workloads such as signal processing.
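The "one service per GPU instance" model described above can be sketched as a small planning helper. This is a minimal, hypothetical illustration: `MAX_MIG_INSTANCES`, `Placement`, and `plan_services` are invented names, the instance limits (4 for the A30X, 7 for the A100X) are taken from the text, and the code does not call any NVIDIA API. Those maximum counts apply only when every instance uses the smallest MIG profile; real partitioning is done with NVIDIA tooling such as `nvidia-smi mig` and is subject to profile sizes and placement rules that this sketch ignores.

```python
from dataclasses import dataclass

# Maximum MIG GPU instances per card, as stated in the product descriptions.
# Assumption: every instance uses the smallest MIG profile.
MAX_MIG_INSTANCES = {"A30X": 4, "A100X": 7}


@dataclass(frozen=True)
class Placement:
    instance: int  # hypothetical MIG instance index on the card
    service: str   # service pinned to that instance


def plan_services(card: str, services: list[str]) -> list[Placement]:
    """Assign each service to its own MIG instance (one service per instance).

    Hypothetical helper for illustration only: it does not talk to the GPU
    and ignores MIG profile sizes and placement constraints.
    """
    limit = MAX_MIG_INSTANCES[card]
    if len(services) > limit:
        raise ValueError(
            f"{card} supports at most {limit} MIG instances, "
            f"got {len(services)} services"
        )
    return [Placement(i, s) for i, s in enumerate(services)]


if __name__ == "__main__":
    # Hypothetical service names loosely based on the use cases above.
    services = ["vran", "cybersecurity", "inference", "telemetry", "signal-processing"]
    for card in ("A30X", "A100X"):
        try:
            plan = plan_services(card, services)
            print(card, [p.service for p in plan])
        except ValueError as err:
            print(card, "->", err)
```

With five services, the sketch rejects the A30X plan and accepts the A100X plan, mirroring the point that the A100X allows more services to run simultaneously.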

PNY NVIDIA Data Center GPUs

NVIDIA® professional GPUs enable everything from stunning industrial design to advanced special effects to complex scientific visualization – and are widely regarded as the world’s preeminent visual computing platform. Trusted by millions of creative and technical professionals to accelerate their workflows, only NVIDIA professional GPUs have the most advanced ecosystem of hardware, software, tools and ISV support to transform today’s disruptive business challenges into tomorrow’s success stories.